Detection Engineering stages of maturity, getting the most out of your SIEM, a story over time

Tuesday, November 14, 2023

Detection Engineering stages of maturity: A Story

Friday, September 10, 2021

Siem Rule - IP Lookup Service

Malware IP lookup service #siem detection rule idea

dns request in:

- canireachthe.net

- ipv4.icanhazip.com

- ip.anysrc.net

- edns.ip-api.com

- wtfismyip.com

- checkip.dyndns.org

- api.2ip.ua

- icanhazip.com

- api.ipify.org

- ip-api.com

- checkip.amazonaws.com

- ipecho.net

- ipinfo.io

- ipv4bot.whatismyipaddress.com

- freegeoip.app

imagename not in

- brave.exe

- iexplore.exe

- opera.exe

- firefox.exe

- msedge.exe

- chrome.exe

- vivaldi.exe
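The rule idea above can be sketched in Python to test the logic before building it in a SIEM. This is only an illustration: the event field names `query` and `image` are assumptions, so map them to whatever your DNS and process logs actually call those fields.

```python
# Domains and browser image names are copied from the rule idea above.
IP_LOOKUP_DOMAINS = {
    "canireachthe.net", "ipv4.icanhazip.com", "ip.anysrc.net",
    "edns.ip-api.com", "wtfismyip.com", "checkip.dyndns.org",
    "api.2ip.ua", "icanhazip.com", "api.ipify.org", "ip-api.com",
    "checkip.amazonaws.com", "ipecho.net", "ipinfo.io",
    "ipv4bot.whatismyipaddress.com", "freegeoip.app",
}
BROWSER_IMAGES = {
    "brave.exe", "iexplore.exe", "opera.exe", "firefox.exe",
    "msedge.exe", "chrome.exe", "vivaldi.exe",
}


def is_suspicious_ip_lookup(event):
    """Return True when a non-browser process resolves an IP lookup domain.

    `event` is a dict with hypothetical keys "query" (DNS name requested)
    and "image" (process image name or full path).
    """
    query = event.get("query", "").lower().rstrip(".")
    image = event.get("image", "").lower()
    # Keep only the file name in case the log carries a full path.
    image_name = image.replace("/", "\\").rsplit("\\", 1)[-1]
    return query in IP_LOOKUP_DOMAINS and image_name not in BROWSER_IMAGES
```

Matching on the exact domain keeps the sketch simple. A production rule may also want to cover subdomains and browsers installed under unusual names.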

Tuesday, October 6, 2020

70 IBM Qradar SIEM Tips!

https://twitter.com/neonprimetime/status/1313222901236142080

Hey for all you #infosec friends stuck with #ibm #qradar just like me, just remember it’s still better than having no #siem at all. Here's my contribution to the community, a mega thread of qradar tips to improve your life

#qradartips

0/N

Qradar Tip #1

equals is case sensitive

username equals 'neonprimetime'

will not find 'Neonprimetime'

(notice the capital N)

from the GUI use contains to be case insensitive!

#qradartips 1/N

Qradar Tip #2

avoid using the GUI for filtering

instead teach yourself AQL

use the "Advanced Search" drop down

it's a powerful SQL-like language

that will allow you to performance tune queries

use complex boolean logic

and much more!

https://www.ibm.com/support/knowledgecenter/SS42VS_7.3.3/com.ibm.qradar.doc/b_qradar_aql.pdf

#qradartips 2/N

Qradar Tip #3

offenses will get purged after a period of time

configure the retention under system settings

or if it's a major incident use the "Actions->Protect"

to permanently save an offense so it never gets deleted

#qradartips 3/N

Qradar Tip #4

be aware qradar has a max number of offenses it will create

after that it just stops creating them

keep your queue below 2500

notice if one particular alert goes nuts it could prevent your SOC from seeing any new offenses

https://www.ibm.com/support/knowledgecenter/en/SS42VS_7.3.2/com.ibm.qradar.doc/38750050.html

#qradartips 4/N

Qradar Tip #5

if you always filter on the same property (rules, reports, dashboards, aql) then index that field, if you don't index performance will be terrible (similar to indexing in a SQL db)

check the "Parse in advance for rules, reports, and searches" box

#qradartips 5/N

Qradar Tip #6

qradar coalescing is dangerous, you lose logs!

(event count > 1)

if event, ip, port & user match it collapses logs into 1 & you only see 1st one so you lose data in other logs like urls, process, etc.

consider disabling

https://www.ibm.com/support/pages/qradar-how-does-coalescing-work-qradar

#qradartips 6/N

Qradar Tip #7

reference sets are fast! it's like magic

want to search all 1000s of recent domain names from UrlHaus?

want to search 100s of Emotet C2 ip addresses all at once?

drop them all into a Reference Set & search

you will be surprised how fast it can be

#qradartips 7/N

Qradar Tip #8

the display drop down acts like a "group by"

it's a super quick way to get a list of users

or a list of ip addresses

or a list of event names

you don't have to search->edit search, just use the display drop down instead

#qradartips 8/N

Qradar Tip #9

if you are not familiar with qradar

you may want to search by windows event ids such as 4688

problem is it seems dead slow even if indexed

a faster alternative is to find the QID (qradar id) instead

(continued)

#qradartips 9a/N

Qradar Tip #9

(continued) ... to find the QID a quick way i found is to click the "false positive" button

the QID will be displayed there

copy & paste it, return and search by QID instead of event id, the search will be indexed and much faster!

#qradartips 9b/N

Qradar Tip #10

performance tip

building new hunts/reports

don't search "last 24 hrs" as every time you update filters it re-runs the whole search due to the stop time changing

instead pick a start/end date so data is cached

this speeds up query building

#qradartips 10/N
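If you build your queries in AQL, one way to pin the window is to generate an explicit START/STOP clause once and reuse it while you tune the filters. A minimal sketch, assuming the 'yyyy-MM-dd HH:mm' timestamp form for START and STOP; the helper name `fixed_window_clause` is made up for this example.

```python
from datetime import datetime, timedelta

# Timestamp layout assumed for AQL START/STOP literals.
AQL_TIME_FORMAT = "%Y-%m-%d %H:%M"


def fixed_window_clause(end, hours=24):
    """Build a START/STOP clause covering the `hours` before `end`."""
    start = end - timedelta(hours=hours)
    return "START '{}' STOP '{}'".format(
        start.strftime(AQL_TIME_FORMAT), end.strftime(AQL_TIME_FORMAT)
    )


# Pick the end time once, then keep it fixed while editing the query.
window_end = datetime(2020, 10, 6, 0, 0)
query = "select sourceip, username from events " + fixed_window_clause(window_end)
print(query)
```

Because the stop time no longer moves, re-running the query with tweaked filters hits the same time range each time.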

Qradar Tip #11

if you need to search an unindexed field or use payload contains

1st prime it by running a larger indexed search against the same time frame

then re-run a 2nd search after adding your unindexed field

it's actually faster to run 2 searches than 1!

#qradartips 11/N

Qradar Tip #12

if you want your offense to have pretty names such as Mitre Techniques

in rule response "dispatch a new event" with the pretty name, then under offense naming choose "should contribute" or "set or replace" the name

#qradartips 12/N

Qradar Tip #13

if you want qradar to only email you once a day per user for an offense

use the response limiter to configure that

there is a caveat however

if the response limiter is hit tip #12 will stop working and names get ugly again

#qradartips 13/N

Qradar Tip #14

you probably knew that qradar does geo location if you hover over a remote ip

but did you know you can make it do the same thing for RFC 1918 internal private addresses?

just use the Country/Region drop down list on the Network Hierarchy screen

#qradartips 14/N

Qradar Tip #15

threat hunters will love using the QFlow feature

which allows you to display the first several bytes of requests & responses in the netflow / Network Activity tab, view packets in real time & even write alerts off them

https://www.ibm.com/support/knowledgecenter/SS42VS_7.3.3/com.ibm.qradar.doc/c_tuning_guide_deploy_qflow.html

#qradartips 15/N

Qradar Tip #16

did you know you can customize the right-click menu of some fields such as ip address

just modify the xml configuration on the qradar console

/opt/qradar/conf/ip_context_menu.xml

you can use the %IP% field for example to pass the IP address to other sites like Virus Total

https://www.ibm.com/support/knowledgecenter/SS42VS_7.3.3/com.ibm.qradar.doc/t_CUSTOMIZING_THE_RIGHT_CLICK_MENU.html

#qradartips 16/N

Qradar Tip #17

on log activity tab the default columns displayed aren't helpful

instead of going Search->Edit search every single time

you may want fields like URL, process name, etc. to show by default

Do this by editing the column layout, then hit Save Criteria

leave it as real time (streaming) then check "Set as Default"

#qradartips 17/N

Qradar Tip #18

you may want a different column layout per objective

example: proxy layout, sysmon layout, antivirus layout

on search->edit search under column definition

choose your columns, enter a custom name and save

now it'll be in the drop down to choose each time

#qradartips 18/N

Qradar Tip #19

vendor app packs can give you solutions quickly

apps can give you pre-built custom fields, rules, and reports

they may require heavy tuning & performance considerations for your environment

but it could be a good starting point

#qradartips 19/N

Qradar Tip #20

log sources create their own fields

problem: u end up w/ multiple fields for same data

ex: proxy url, sysmon url, edr url, waf url

this creates a nightmare for SOC to search

tip: consolidate related custom fields into a single field like 'url'

#qradartips 20/N

Qradar Tip #21

sometimes it's easier in excel or notepad++

if you just want to dump the raw logs to excel

use Actions -> Export to CSV -> Full Export (all columns)

and find the column named 'payloadAsUTF'

now you have the raw logs to mess around with

#qradartips 21/N

Qradar Tip #22

worried about access control?

use the admin -> user roles to limit which screens users can access

#qradartips 22/N

Qradar Tip #23

want to control who sees which log sources?

use the admin -> security profiles

in conjunction with the log source groups screen

to restrict which users can access which log sources

#qradartips 23/N

Qradar Tip #24

have compliance requirements for log retention?

or have disk space/performance issues?

use the event retention screen to auto purge logs

based on whatever simple or complex query you want!

#qradartips 24/N

Qradar Tip #25

is your assets tab / profiler filled with complete junk?

use the asset profiler configuration -> manage identity exclusions

to exclude those invalid ip addresses, hostnames, mac addresses, etc.

based off saved searches

https://www.ibm.com/support/knowledgecenter/SSKMKU/com.ibm.qradar.doc/c_qradar_ug_asset_identity_exclusion.html

#qradartips 25/N

Qradar Tip #26

similar to reference sets, sometimes it's nice to group certain log sources together for rules and hunts

try using 'log source groups' instead of reference sets or massive "or" statements

example: if a rule only applies to domain controllers, DHCP servers, or your IDS appliances

#qradartips 26/N

Qradar Tip #27

if you have a single system sending multiple log source types

ex: server sends windows events, sql logs, web logs, and app logs

you may notice incorrect parsing, web logs treated as sql, etc.

if so you may need to adjust the Log "Parsing Order"

#qradartips 27/N

Qradar Tip #28

qradar has a built-in rule wizard filter that allows you to monitor when a log source stopped sending logs

this can be helpful for example to monitor critical systems like DCs & get alerted immediately if broken

"event(s) have not been detected"

#qradartips 28/N

Qradar Tip #29

if a log source is in an ERROR or WARN state

clicking into it will show a RED or YELLOW banner at the top indicating the reason for the error

#qradartips 29/N

Qradar Tip #30

only want your alert to fire if something happens multiple times and when certain fields match or mismatch?

use one of the many rule wizard filters for many times with some fields the same and some fields different

#qradartips 30/N

Qradar Tip #31

did you create a dashboard but it defaults to some ugly table/bar chart?

switch to time series, check the "capture time series data" box

then be patient and wait (many hours sometimes) for the data to cache into the graph

now you can time scroll

#qradartips 31/N

Qradar Tip #32

logs coming in as Universal DSM w/ unknown name

unable to search by ip, port, or username?

add a log source extension or custom DSM

it's just a bunch of regex in XML

it will allow you to parse out those critical fields and make them searchable

#qradartips 32/N

Qradar Tip #33

did you run a long slow search a few hours ago?

do you need the results again but don't want to wait to re-run it?

go to Search -> Manage Search Results

find your search from hours ago

click the "COMPLETED" link

the cached results return instantly!

#qradartips 33/N

Qradar Tip #34

did you make changes such as add a new user, add a log source, add a subnet

but the changes haven't taken effect yet and can't figure out why?

it may be waiting for you to "Deploy Changes" from the admin tab

#qradartips 34/N

Qradar Tip #35

did you know every time you create a dashboard time series or report it essentially creates a database table & stores the data somewhere

you can manage those tables/data including disabling or deleting by going to "Aggregated Data Management"

#qradartips 35/N

Qradar Tip #36

historical quirk to be aware of

i've seen issues with deleting an admin user

if they were the owner of a critical dashboard, rule, report, etc. it could break it

so at least initially it's safer to "Disable" a termed user instead of "Delete"

#qradartips 36/N

Qradar Tip #37

are you trying to manipulate the Magnitude to adjust queue priorities?

i don't have good news for you here

"Magnitude calculations are proprietary to QRadar"

using the Magnitude adjustment rules & fields doesn't seem to make much impact

we use our SOAR platform to prioritize instead

https://www.ibm.com/mysupport/s/question/0D50z00006PEFAuCAP/how-magnitude-calculation-works?language=en_US

#qradartips 37/N

Qradar Tip #38

be careful after applying updates, DSM or app pack updates, etc.

qradar likes to change regexes or disable your custom event properties

when that happens your rules break

monitor the 'last modified date' on custom event properties to find these anomalies

#qradartips 38/N

Qradar Tip #39

qradar actually audits itself!

you can write reports and rules to monitor qradar itself

such as who performed a search, who logged in, who added a user, etc.

#qradartips 39/N

Qradar Tip #40

performance tip

avoid custom event properties for an entire log source type

instead be specific, choose an event name or category for each custom event property to avoid parsing overhead

#qradartips 40/N

Qradar Tip #41

qradar log sources like to break together in groups

a good example is all qradar JDBC connections dropping at once during an update

use the log sources screen and sort by Last Event Time to look for such anomalies

#qradartips 41/N

Qradar Tip #42

getting crushed by EPS, FPS, cpu, or licensing issues?

use the log activity tab, group by log source type, log source, and event name to see the noisiest log sources

such as a linux server set to debug syslogging

and start trimming your EPS

#qradartips 42/N

Qradar Tip #43

you can customize the offense close reasons for reporting/metrics purposes

under the actions->close

click the little edit icon (paper & pencil)

#qradartips 43/N

Qradar Tip #44

do you get emailed a report daily?

do you need a copy of that report from several days ago but it's no longer in your inbox?

you can go to the reports screens, pull down the generated reports drop down, and find past reports to download

#qradartips 44/N

Qradar Tip #45

is your asset tab important & you can't lose the data?

consider occasionally exporting data (you can re-import later) or do db backups

many times during system/support issues i've seen the assets database get purged and start from scratch

#qradartips 45/N

Qradar Tip #46

event and flow direction are wonderful fields that allow you to identify if traffic is leaving the company, coming in, or traversing laterally based on your network hierarchy

L2L (local to local), L2R (local to remote), R2L (remote to local)

#qradartips 46/N

Qradar Tip #47

in AQL be careful, the like/ilike wildcard is a percent sign (%), NOT AN ASTERISK!

ilike '%badstuff%'

#qradartips 47/N

Qradar Tip #48

in AQL, 'like' and 'matches' are case sensitive

'ilike' and 'imatches' are case insensitive

ilike '%badstuff%'

imatches '.*(bad|good).*'

#qradartips 48/N

Qradar Tip #49

in AQL more of a regex reminder

but if you're looking for ends with '.exe' for example

if you're using 'ilike' , then do not escape the period

ilike '%.exe'

if you are using imatches then yes you must escape the period

imatches '.*\.exe'

#qradartips 49/N
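The difference between the two pattern styles in tips 47 to 49 is easy to try outside of qradar. The sketch below uses Python's `re` module as a stand-in for the AQL regex engine (an assumption, the engines are not identical) and a made-up helper, `like_to_regex`, that treats `%` as "any run of characters" and `_` as "one character", with every other character taken literally.

```python
import re


def like_to_regex(pattern):
    """Translate a LIKE-style pattern into an equivalent regex string."""
    parts = []
    for ch in pattern:
        if ch == "%":
            parts.append(".*")   # % matches zero or more characters
        elif ch == "_":
            parts.append(".")    # _ matches exactly one character
        else:
            parts.append(re.escape(ch))  # everything else is literal
    return "".join(parts)


def ilike(value, pattern):
    """Case-insensitive LIKE-style match over the whole value."""
    regex = like_to_regex(pattern)
    return re.fullmatch(regex, value, flags=re.IGNORECASE | re.DOTALL) is not None


# The period is literal in the LIKE form, so it comes out escaped as a regex.
print(like_to_regex("%.exe"))
# An unescaped period in a regex matches any character, which is the pitfall.
print(re.fullmatch(r".*.exe", "badexe") is not None)
```

The last line shows why the period must be escaped with imatches: without the backslash, a value like 'badexe' matches even though it has no '.exe' extension.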

Qradar Tip #50

in AQL the "in" keyword is nice and saves you a ton of "or" statements

Method in ('POST', 'GET', 'PUT')

#qradartips 50/N

Qradar Tip #51

single tick vs double quote

double quotes go around custom fields/properties like "URL" and "User Agent"

you can avoid double quotes if the custom field has no spaces in it

single ticks go around string constants like 'PUT', 'POST', 'GET' or renaming a column like 'Total'

#qradartips 51/N
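If you generate AQL from scripts, the quoting rules from tips 50 and 51 can be wrapped in a couple of small helpers. This is a sketch with made-up function names. It refuses string constants that contain a single tick rather than guess at how they should be escaped.

```python
def quote_field(name):
    """Wrap a field name in double quotes when it contains a space."""
    return '"{}"'.format(name) if " " in name else name


def quote_string(value):
    """Wrap a string constant in single ticks."""
    if "'" in value:
        # Escaping rules are not covered here, so fail loudly instead.
        raise ValueError("string constants with single ticks are not supported")
    return "'{}'".format(value)


def in_clause(field, values):
    """Build `field in ('a', 'b', ...)` instead of a chain of "or" tests."""
    quoted = ", ".join(quote_string(v) for v in values)
    return "{} in ({})".format(quote_field(field), quoted)


print(in_clause("Method", ["POST", "GET", "PUT"]))
print(in_clause("User Agent", ["curl/7.68.0"]))
```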

Qradar Tip #52

in AQL, N/A = null !

a common mistake i see is people trying to do

username = 'N/A'

that won't work, you must do

username is null

#qradartips 52/N

Qradar Tip #53

AQL tip

sometimes logs/parsing are messed up & for example sourceip = destinationip

include/exclude these anomalies by comparing 2 different fields in AQL!

(you can't do that in the GUI rule wizard or add filter wizard)

sourceip != destinationip

#qradartips 53/N

Qradar Tip #54

a qradar regex / rule gotcha

qradar seems to strip out certain special characters such as a plus ('+') sign probably to prevent injection attacks

a side effect this has is if you write a regex in a rule with a plus sign

it looks like it disappears (even though it's actually there and the rule works)

#qradartips 54/N

Qradar Tip #55

AQL big performance tip

normally in the GUI if you edit your search it gives you a popup & cancels your 1st search

in AQL, if you hit search, edit your search, hit search again, edit your search, hit search

you just launched multiple searches in parallel!

in AQL hit cancel BEFORE editing your search!

#qradartips 55/N

Qradar Tip #56

AQL performance tip

i prefer where clauses with QID/devicetype/logsourceid over qidname(), logsourcename(), logsourcetypename()

performance seems many times better, especially if you are used to using like/matches

i memorize/have index of the ID numbers and just use them

(continued)

#qradartips 56a/N

Qradar Tip #56

(continued)

if i don't know the qid/devicetype/logsourceid but i need it

i again find it faster to run 2 searches than 1

i 1st run a short (last 2 minutes) search to get the ids

then i run a 2nd search using the ids i found

#qradartips 56b/N

Qradar Tip #57

AQL performance tip

my starting point in almost every search is devicetype (aka log source type)

if i need 4688 process creates by nature that search is slow cause it'd search all events (Linux, Firewall, Windows events)

so narrow your search down to the correct devicetype first

#qradartips 57/N

Qradar Tip #58

Dashboard tip

tired of clicking "View log activity" to drill into the details of a graph?

use AQL to build the query w/ CONCAT that shows all the data you need!

select concat(computer, ', ', username, ', ', url) as 'ComputerUsernameSite'

#qradartips 58/N

Qradar Tip #59

AQL tip

count is powerful to indicate how many times something happened

but UNIQUECOUNT() is even better!

it can tell you how many unique users, or ips, processes, or urls were seen

#qradartips 59/N

Qradar Tip #60

Qradar stores multiple time fields

starttime = time qradar received the log

endtime (aka Storage Time) = time qradar finished processing it

devicetime (aka Log Source Time) = time from the actual log

(continued)

#qradartips 60a/N

Qradar Tip #60

(continued)

the time stamps are in integer format by default

you can sort them as normal using 'order by' and 'asc' or 'desc'

you can format them to display pretty with

dateformat(<field>, 'yyyy-MM-dd hh:mm a')

(continued)

#qradartips 60b/N

Qradar Tip #60

(continued)

most important is devicetime (when event actually logged)

unfortunately qradar indexes "last 2 hours" & "start/stop" on the starttime field

tip: compare devicetime vs starttime or starttime vs endtime to find performance issues!

#qradartips 60c/N

Qradar Tip #61

you can use the ever so powerful reference sets in AQL also!

REFERENCESETCONTAINS('reference set name', sourceip)

#qradartips 61/N

Qradar Tip #62

in AQL don't forget if aggregating (COUNT, SUM) to include your "group by" just like any SQL-like language

otherwise query will run but return unexpected results

select sourceip, UNIQUECOUNT(username) as 'Users' from events group by sourceip

#qradartips 62/N
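To make it concrete what the grouped query computes, here is the same "unique users per source ip" calculation over a few in-memory rows. The rows and the helper name `unique_count_by` are invented for illustration. The real work happens inside qradar.

```python
from collections import defaultdict


def unique_count_by(events, group_field, count_field):
    """Count distinct `count_field` values for each `group_field` value."""
    groups = defaultdict(set)
    for event in events:
        groups[event[group_field]].add(event[count_field])
    return {key: len(values) for key, values in groups.items()}


events = [
    {"sourceip": "10.0.0.5", "username": "alice"},
    {"sourceip": "10.0.0.5", "username": "alice"},
    {"sourceip": "10.0.0.5", "username": "bob"},
    {"sourceip": "10.0.0.9", "username": "carol"},
]

# Mirrors: select sourceip, UNIQUECOUNT(username) ... group by sourceip
print(unique_count_by(events, "sourceip", "username"))
```

Without the grouping step there is only one bucket for all rows, which is why leaving out "group by" gives a result that looks valid but answers a different question.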

Qradar Tip #63

sometimes certain fields are stupid long, perhaps legitimately or perhaps due to a parsing issue

you can use the STRLEN() function to filter out or eliminate those long noisy fields

#qradartips 63/N

Qradar Tip #64

AQL tip

if you have your network hierarchy admin page configured

you can start searching for odd lateral movement

use the FULLNETWORKNAME() function against ip addresses

such as below seeing a workstation talking to a mobile device

#qradartips 64/N

Qradar Tip #65

AQL tip

you can search for odd connections to countries you don't do business in

use the sourcegeographiclocation and destinationgeographiclocation fields

#qradartips 65/N

Qradar Tip #66

if you expand the "Current Statistics" link on the log screen

it shows interesting stats like how many records were returned, how much data (MB or GB) were searched, and how long it took

#qradartips 66/N

Qradar Tip #67

my plug for AQL. learn it!

Search -> Edit Search is a slow nightmare

anytime you change column layout, add/remove column, sort by, etc. you get full screen refresh

just learn AQL!

adjust columns, sort/filter w/out leaving the screen!

#qradartips 67/N

Qradar Tip #68

don't forget Qradar has a full API available

just append "/api_doc" to the end of your qradar url instance

start reading up on it

then move to writing your python scripts and get out of the GUI completely

#qradartips 68/N

Qradar Tip #69

you can execute sample API requests right from the GUI

and learn what the CURL statements would look like, etc.

just append '/api_doc' to the end of your url again and drill into the different areas

#qradartips 69/N

Qradar Tip #70

manage who or what can access your API with the "Authorized Services" admin screen

#qradartips 70/N
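As a starting point for tips 68 to 70, here is a sketch that prepares an authenticated API request using only the Python standard library. It assumes the offenses endpoint lives at `/api/siem/offenses` and that the authorized service token goes in a `SEC` header. Confirm both on your own `/api_doc` page. The console hostname and token below are placeholders.

```python
import urllib.request


def build_offenses_request(console_url, token):
    """Prepare (but do not send) a GET request for the offenses list."""
    url = console_url.rstrip("/") + "/api/siem/offenses"
    request = urllib.request.Request(url, method="GET")
    # Token from the "Authorized Services" admin screen (placeholder here).
    request.add_header("SEC", token)
    request.add_header("Accept", "application/json")
    return request


request = build_offenses_request("https://qradar.example.com/", "YOUR-TOKEN-HERE")
print(request.get_method(), request.full_url)

# To send it, pass the request to urllib.request.urlopen() along with an
# ssl context that trusts your console certificate, then json.load() the body.
```

Building the request separately from sending it makes it easy to inspect the URL and headers before anything touches the console.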

If you got this far and read all tips, thank you!

I hope this helps you, my #infosec friends, to improve your experience with #ibm #qradar #siem

#qradartips

Tuesday, September 15, 2020

How we use Agile Scrum for SIEM Detection Engineering and Threat Hunting

Friday, January 15, 2016

QRadar SIEM API call for Offenses Assigned to User

After you have the default sample api calls working, just download my python script for assigned_to.py and put it in the same folder. Then run it as follows.

# Offenses Assigned to Myself

> offenses/assigned_to.py -u MYUSERID

id:128 [MYUSERID] SrcIP=66.66.220.109

id:127 [MYUSERID] SrcIP=172.16.17.2

id:126 [MYUSERID] DstIP=61.61.61.33

id:125 [MYUSERID] DstIP=61.61.61.57

id:124 [MYUSERID] DstIP=10.0.0.2

# Offenses Not assigned to anybody yet

> offenses/assigned_to.py -u UNASSIGNED

id:133 [ ] SrcIP=190.190.117.177

id:132 [ ] User =USER22

id:131 [ ] SrcIP=66.66.103.118
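The filtering idea behind this kind of script can be sketched as below. This is not the actual assigned_to.py. It assumes each offense is a dict with `id`, `assigned_to`, and `offense_source` keys, as you would get after decoding the JSON from the offenses API, and the sample offenses are invented.

```python
def offenses_for(offenses, user):
    """Return offenses assigned to `user`, or unassigned ones for "UNASSIGNED"."""
    if user == "UNASSIGNED":
        return [o for o in offenses if not o.get("assigned_to")]
    return [o for o in offenses if o.get("assigned_to") == user]


def format_offense(offense):
    """Render one offense as a short single-line summary."""
    owner = offense.get("assigned_to") or " "
    return "id:{} [{}] {}".format(offense["id"], owner, offense["offense_source"])


# Invented sample data standing in for a decoded API response.
offenses = [
    {"id": 128, "assigned_to": "MYUSERID", "offense_source": "66.66.220.109"},
    {"id": 133, "assigned_to": None, "offense_source": "190.190.117.177"},
    {"id": 127, "assigned_to": "MYUSERID", "offense_source": "172.16.17.2"},
]

for offense in offenses_for(offenses, "MYUSERID"):
    print(format_offense(offense))
```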

More about neonprimetime

Top Blogs of all-time

- pagerank botnet sql injection walk-thru

- php injection ali.txt walk-thru

- php injection exfil walk-thru

Copyright © 2015, this post cannot be reproduced or retransmitted in any form without reference to the original post.

QRadar SIEM API 101 Walk-Through

I downloaded the sample API python modules (RestApiClient.py, SampleUtilities.py, etc.) from github

I downloaded the sample API script (01_GetOffenses.py) from github

I saved them all to the same folder.

I made sure I had python3 installed (not 2).

Then I had to download our console website PEM from the certificate like so and save it to the same folder.

Then I had to create an authorized service/token.

Then run the script via

python 01_GetOffenses.py

It will prompt you to enter your authorization token (from the authorized service screen above) and your certificate location (copy the full path to the .crt file). Once you hit enter, you have the choice to save this token and certificate information to a plaintext file for future use. But then the API call runs and boom you have a list of all offenses!


Copyright © 2015, this post cannot be reproduced or retransmitted in any form without reference to the original post.

Wednesday, March 11, 2015

QRadar Custom Right-Click Menu for IP Addresses

1.) Open ip_context_menu.xml with an editor like 'nano' (Likely located in /opt/qradar/conf)

2.) Add the following menuEntry line inside the contextMenu element

<contextMenu>

<menuEntry name="ARIN Lookup" url="http://whois.arin.net/rest/ip/%IP%" />

</contextMenu>

3.) Restart the tomcat service (Admin -> Advanced -> Restart Web Server)

4.) Enjoy the results!
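If you maintain several menu entries, step 2 can be scripted. The sketch below assumes the file's root element is `contextMenu`, as in the snippet above, and works on the XML text so you can review the result before writing it back. The VirusTotal URL is only an example entry, and you should back up the original file first.

```python
import xml.etree.ElementTree as ET


def add_menu_entry(xml_text, name, url):
    """Return the XML text with one more menuEntry under contextMenu."""
    root = ET.fromstring(xml_text)
    if root.tag != "contextMenu":
        raise ValueError("expected a contextMenu root element")
    # %IP% is left as-is; qradar substitutes the clicked IP address.
    ET.SubElement(root, "menuEntry", {"name": name, "url": url})
    return ET.tostring(root, encoding="unicode")


original = (
    "<contextMenu>"
    '<menuEntry name="ARIN Lookup" url="http://whois.arin.net/rest/ip/%IP%" />'
    "</contextMenu>"
)
updated = add_menu_entry(
    original, "VirusTotal Lookup", "https://www.virustotal.com/gui/ip-address/%IP%"
)
print(updated)
```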

Copyright © 2015, this post cannot be reproduced or retransmitted in any form without reference to the original post.

Monday, December 22, 2014

QRadar SIEM 101: Find Expensive Custom Rules

[xxx@yyy support]# ./findExpensiveCustomRules.sh

NOTE: This is typically found in /opt/qradar/support/

It'll then tell you where it stored the data

Data can be found in ./CustomRule-xxxx-xx-xx-yyyyyyy.tar.gz

Then you can extract that folder and view the contents

[xxx@yyy support]# tar -zxvf CustomRule-xxxx-xx-xx-yyyyyyy.tar.gz

Good luck parsing through the data! At a high level, I think the top folder's txt/xml file tries to summarize it all. So look in there for the first rule that shows up that is one you wrote. If you need more details, go into the reports folder and do the same thing on each of those files.

AverageActionsTime-xxxx-xx-xx-yyyyyyy.report

AverageExecutionTime-xxxx-xx-xx-yyyyyyy.report

AverageResponseTime-xxxx-xx-xx-yyyyyyy.report

AverageTestTime-xxxx-xx-xx-yyyyyyy.report

MaximumResponseTime-xxxx-xx-xx-yyyyyyy.report

MaximumTestTime-xxxx-xx-xx-yyyyyyy.report

MaximumActionsTime-xxxx-xx-xx-yyyyyyy.report

MaximumExecutionTime-xxxx-xx-xx-yyyyyyy.report

TotalResponseCount-xxxx-xx-xx-yyyyyyy.report

TotalResponseTime-xxxx-xx-xx-yyyyyyy.report

TotalActionsCount-xxxx-xx-xx-yyyyyyy.report

TotalTestCount-xxxx-xx-xx-yyyyyyy.report

TotalActionsTime-xxxx-xx-xx-yyyyyyy.report

TotalTestTime-xxxx-xx-xx-yyyyyyy.report

TotalExecutionCount-xxxx-xx-xx-yyyyyyy.report

TotalExecutionTime-xxxx-xx-xx-yyyyyyy.report

Copyright © 2014, this post cannot be reproduced or retransmitted in any form without reference to the original post.

QRadar SIEM 101: Reference Maps

At the console command prompt the first thing you must do is create a Reference Map.

[xxx@yyy ~]# ./ReferenceDataUtil.sh create yourReferenceMapName MAP ALNIC

NOTE: ALNIC = Alphanumeric data that ignores case. You can replace this with ALN (case sensitive), or IP (for IPs), or NUM (for numbers)

NOTE: The shell script is typically found in this location: /opt/qradar/bin/ReferenceDataUtil.sh

Next you can create a CSV file that you'll use to import the data.

key1,data
userid1,WorkstationName1
userid2,WorkstationName1
userid2,WorkstationName2
userid3,WorkstationName3
userid4,WorkstationName4

NOTE: The first row is the column headers, just keep them as literally the words key1,data
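If the key/value pairs come from another system, the import file can be generated rather than typed by hand. A minimal sketch using Python's csv module. The function name and sample pairs are illustrative, and the header row is the literal key1,data described above.

```python
import csv
import io


def build_reference_map_csv(pairs):
    """Return CSV text with the key1,data header followed by one row per pair."""
    buffer = io.StringIO()
    writer = csv.writer(buffer, lineterminator="\n")
    writer.writerow(["key1", "data"])  # literal header row expected on import
    writer.writerows(pairs)
    return buffer.getvalue()


pairs = [
    ("userid1", "WorkstationName1"),
    ("userid3", "WorkstationName3"),
]
csv_text = build_reference_map_csv(pairs)
print(csv_text, end="")

# Write csv_text to a file such as ~/myfile.csv before running the load step.
```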

Then you import/load that file into the Reference Map.

[xxx@yyy ~]# ./ReferenceDataUtil.sh load yourReferenceMapName ~/myfile.csv

Then to validate it worked you can print them all out

[xxx@yyy ~]# ./ReferenceDataUtil.sh list yourReferenceMapName displayContents

If you goofed, first delete, then re-add

[xxx@yyy ~]# ./ReferenceDataUtil.sh delete yourReferenceMapName WorkstationName1 userid1 Key1

[xxx@yyy ~]# ./ReferenceDataUtil.sh add yourReferenceMapName WorkstationName1a userid1 Key1

If you really goofed, purge them all and start over

[xxx@yyy ~]# ./ReferenceDataUtil.sh purge yourReferenceMapName

If you really really goofed, delete the whole Reference Map

[xxx@yyy ~]# ./ReferenceDataUtil.sh remove yourReferenceMapName

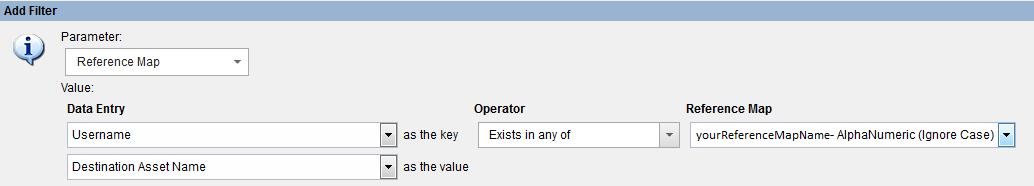

If all is well, and you created it successfully, you can go to the GUI and add a filter with it

Copyright © 2014, this post cannot be reproduced or retransmitted in any form without reference to the original post.